Chapter 13 – Loading and Preprocessing Data with TensorFlow

This notebook contains all the sample code and solutions to the exercises in chapter 13.


Setup

First, let’s import a few common modules, ensure Matplotlib plots figures inline, and prepare a function to save the figures. We also check that Python 3.5 or later is installed (although Python 2.x may work, it is deprecated, so we strongly recommend you use Python 3 instead), as well as Scikit-Learn ≥0.20 and TensorFlow ≥2.0.

# Python ≥3.5 is required

import sys

assert sys.version_info >= (3, 5)

# Scikit-Learn ≥0.20 is required

import sklearn

assert sklearn.__version__ >= "0.20"

try:
    # %tensorflow_version only exists in Colab.
    %tensorflow_version 2.x
    !pip install -q -U tfx==0.15.0rc0
    print("You can safely ignore the package incompatibility errors.")
except Exception:
    pass

# TensorFlow ≥2.0 is required

import tensorflow as tf

from tensorflow import keras

assert tf.__version__ >= "2.0"

# Common imports

import numpy as np

import os

# to make this notebook's output stable across runs

np.random.seed(42)

# To plot pretty figures

%matplotlib inline

import matplotlib as mpl

import matplotlib.pyplot as plt

mpl.rc('axes', labelsize=14)

mpl.rc('xtick', labelsize=12)

mpl.rc('ytick', labelsize=12)

# Where to save the figures

PROJECT_ROOT_DIR = "."

CHAPTER_ID = "data"

IMAGES_PATH = os.path.join(PROJECT_ROOT_DIR, "images", CHAPTER_ID)

os.makedirs(IMAGES_PATH, exist_ok=True)

def save_fig(fig_id, tight_layout=True, fig_extension="png", resolution=300):
    path = os.path.join(IMAGES_PATH, fig_id + "." + fig_extension)
    print("Saving figure", fig_id)
    if tight_layout:
        plt.tight_layout()
    plt.savefig(path, format=fig_extension, dpi=resolution)

Datasets

X = tf.range(10)

dataset = tf.data.Dataset.from_tensor_slices(X)

dataset

Equivalently:

dataset = tf.data.Dataset.range(10)

for item in dataset:
    print(item)

dataset = dataset.repeat(3).batch(7)

for item in dataset:
    print(item)

dataset = dataset.map(lambda x: x * 2)

for item in dataset:
    print(item)

dataset = dataset.unbatch() # replaces the deprecated tf.data.experimental.unbatch()

dataset = dataset.filter(lambda x: x < 10) # keep only items < 10

for item in dataset.take(3):
    print(item)

dataset = tf.data.Dataset.range(10).repeat(3)

dataset = dataset.shuffle(buffer_size=3, seed=42).batch(7)

for item in dataset:
    print(item)

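Split the California dataset into multiple CSV files

Let’s start by loading and preparing the California housing dataset. We first load it, then split it into a training set, a validation set and a test set, and finally we fit a scaler on the training set to get the mean and standard deviation of each feature (the scaling itself is applied later, in the input pipeline):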

from sklearn.datasets import fetch_california_housing

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

housing = fetch_california_housing()

X_train_full, X_test, y_train_full, y_test = train_test_split(

housing.data, housing.target.reshape(-1, 1), random_state=42)

X_train, X_valid, y_train, y_valid = train_test_split(

X_train_full, y_train_full, random_state=42)

scaler = StandardScaler()

scaler.fit(X_train)

X_mean = scaler.mean_

X_std = scaler.scale_
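As a rough illustration of what these statistics are for, the sketch below standardizes a single feature column by hand in pure Python. The sample values and the `standardize` helper are made up for this example. It assumes the population standard deviation (dividing by n), which is what `StandardScaler` stores in `scale_`.

```python
import statistics

def standardize(values):
    """Return (scaled_values, mean, std) using the population standard deviation."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)  # population std: divides by n, not n - 1
    scaled = [(v - mean) / std for v in values]
    return scaled, mean, std

# Hypothetical feature column (not taken from the housing data)
ages = [10.0, 20.0, 30.0, 40.0]
scaled, mean, std = standardize(ages)
print("mean =", mean, "std =", std)
print("scaled =", scaled)
```

After this transformation the column has a mean of 0 and a standard deviation of 1, which is the same computation that `(x - X_mean) / X_std` performs on every feature in the input pipeline.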

For a very large dataset that does not fit in memory, you will typically want to split it into many files first, then have TensorFlow read these files in parallel. To demonstrate this, let’s start by splitting the housing dataset and saving it to multiple CSV files (20 for the training set, and 10 each for the validation and test sets):

def save_to_multiple_csv_files(data, name_prefix, header=None, n_parts=10):
    housing_dir = os.path.join("datasets", "housing")
    os.makedirs(housing_dir, exist_ok=True)
    path_format = os.path.join(housing_dir, "my_{}_{:02d}.csv")

    filepaths = []
    m = len(data)
    for file_idx, row_indices in enumerate(np.array_split(np.arange(m), n_parts)):
        part_csv = path_format.format(name_prefix, file_idx)
        filepaths.append(part_csv)
        with open(part_csv, "wt", encoding="utf-8") as f:
            if header is not None:
                f.write(header)
                f.write("\n")
            for row_idx in row_indices:
                # float() keeps each value a plain number, even on NumPy versions
                # whose repr() of a scalar includes the type name
                f.write(",".join([repr(float(col)) for col in data[row_idx]]))
                f.write("\n")
    return filepaths

train_data = np.c_[X_train, y_train]

valid_data = np.c_[X_valid, y_valid]

test_data = np.c_[X_test, y_test]

header_cols = housing.feature_names + ["MedianHouseValue"]

header = ",".join(header_cols)

train_filepaths = save_to_multiple_csv_files(train_data, "train", header, n_parts=20)

valid_filepaths = save_to_multiple_csv_files(valid_data, "valid", header, n_parts=10)

test_filepaths = save_to_multiple_csv_files(test_data, "test", header, n_parts=10)
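To see how the rows end up distributed across the files, here is a small pure-Python sketch of the chunking rule that `np.array_split()` applies to the row indices: the chunks are contiguous, and the first `m % n_parts` chunks get one extra row. The `split_indices` helper is a hypothetical name used only for this illustration.

```python
def split_indices(m, n_parts):
    """Split range(m) into n_parts contiguous chunks whose sizes differ by at most 1."""
    base, extra = divmod(m, n_parts)
    chunks, start = [], 0
    for part in range(n_parts):
        size = base + 1 if part < extra else base  # the first `extra` chunks get one more row
        chunks.append(list(range(start, start + size)))
        start += size
    return chunks

print(split_indices(10, 3))  # [[0, 1, 2, 3], [4, 5, 6], [7, 8, 9]]
```

Because the chunks are contiguous, each file holds a consecutive slice of the already shuffled training set, so no file is biased toward a particular region of the original data.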

Okay, now let’s take a peek at the first few lines of one of these CSV files:

import pandas as pd

pd.read_csv(train_filepaths[0]).head()

Or in text mode:

with open(train_filepaths[0]) as f:
    for i in range(5):
        print(f.readline(), end="")

train_filepaths

Building an Input Pipeline

filepath_dataset = tf.data.Dataset.list_files(train_filepaths, seed=42)

for filepath in filepath_dataset:
    print(filepath)

n_readers = 5
dataset = filepath_dataset.interleave(
    lambda filepath: tf.data.TextLineDataset(filepath).skip(1),
    cycle_length=n_readers)

for line in dataset.take(5):
    print(line.numpy())

Notice that field 4 is interpreted as a string, because its default value ("Hello") is a string:

record_defaults=[0, np.nan, tf.constant(np.nan, dtype=tf.float64), "Hello", tf.constant([])]

parsed_fields = tf.io.decode_csv('1,2,3,4,5', record_defaults)

parsed_fields

Notice that all missing fields are replaced with their default value, when provided:

parsed_fields = tf.io.decode_csv(',,,,5', record_defaults)

parsed_fields

The 5th field is compulsory (since we provided tf.constant([]) as the “default value”), so we get an exception if we do not provide it:

try:
    parsed_fields = tf.io.decode_csv(',,,,', record_defaults)
except tf.errors.InvalidArgumentError as ex:
    print(ex)

The number of fields should match exactly the number of fields in the record_defaults:

try:
    parsed_fields = tf.io.decode_csv('1,2,3,4,5,6,7', record_defaults)
except tf.errors.InvalidArgumentError as ex:
    print(ex)
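The rules demonstrated above (defaults fill empty fields, an empty default marks a field as required, and the field count must match) can be modeled in a few lines of plain Python. This is only a simplified sketch of the behavior, not how `tf.io.decode_csv()` is implemented: the `REQUIRED` marker and the `parse_csv_line` helper are hypothetical names, quoting is ignored, and required fields are assumed to be floats.

```python
import math

REQUIRED = object()  # hypothetical marker standing in for an empty default such as tf.constant([])

def parse_csv_line(line, defaults):
    """Parse one comma-separated line, filling empty fields from `defaults`."""
    fields = line.split(",")  # simplification: no support for quoted fields
    if len(fields) != len(defaults):
        raise ValueError("expected {} fields but got {}".format(len(defaults), len(fields)))
    parsed = []
    for index, (text, default) in enumerate(zip(fields, defaults)):
        if text == "":
            if default is REQUIRED:
                raise ValueError("field {} is required but missing".format(index))
            parsed.append(default)
        else:
            # The type of the default decides how the text is converted;
            # required fields are read as floats in this sketch
            convert = float if default is REQUIRED else type(default)
            parsed.append(convert(text))
    return parsed

defaults = [0, math.nan, math.nan, "Hello", REQUIRED]
print(parse_csv_line("1,2,3,4,5", defaults))
print(parse_csv_line(",,,,5", defaults))
```

Calling `parse_csv_line(",,,,", defaults)` or passing a line with seven fields raises a `ValueError`, mirroring the two failure cases shown with `tf.io.decode_csv()`.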

n_inputs = 8 # X_train.shape[-1]

@tf.function
def preprocess(line):
    defs = [0.] * n_inputs + [tf.constant([], dtype=tf.float32)]
    fields = tf.io.decode_csv(line, record_defaults=defs)
    x = tf.stack(fields[:-1])
    y = tf.stack(fields[-1:])
    return (x - X_mean) / X_std, y

preprocess(b'4.2083,44.0,5.3232,0.9171,846.0,2.3370,37.47,-122.2,2.782')

def csv_reader_dataset(filepaths, repeat=1, n_readers=5,
                       n_read_threads=None, shuffle_buffer_size=10000,
                       n_parse_threads=5, batch_size=32):
    dataset = tf.data.Dataset.list_files(filepaths).repeat(repeat)
    dataset = dataset.interleave(
        lambda filepath: tf.data.TextLineDataset(filepath).skip(1),
        cycle_length=n_readers, num_parallel_calls=n_read_threads)
    dataset = dataset.shuffle(shuffle_buffer_size)
    dataset = dataset.map(preprocess, num_parallel_calls=n_parse_threads)
    dataset = dataset.batch(batch_size)
    return dataset.prefetch(1)

train_set = csv_reader_dataset(train_filepaths, batch_size=3)

for X_batch, y_batch in train_set.take(2):
    print("X =", X_batch)
    print("y =", y_batch)
    print()

train_set = csv_reader_dataset(train_filepaths, repeat=None)

valid_set = csv_reader_dataset(valid_filepaths)

test_set = csv_reader_dataset(test_filepaths)

model = keras.models.Sequential([
    keras.layers.Dense(30, activation="relu", input_shape=X_train.shape[1:]),
    keras.layers.Dense(1),
])

model.compile(loss="mse", optimizer=keras.optimizers.SGD(learning_rate=1e-3))

batch_size = 32
model.fit(train_set, steps_per_epoch=len(X_train) // batch_size, epochs=10,
          validation_data=valid_set)

model.evaluate(test_set, steps=len(X_test) // batch_size)

new_set = test_set.map(lambda X, y: X) # we could instead just pass test_set, Keras would ignore the labels

X_new = X_test

model.predict(new_set, steps=len(X_new) // batch_size)

optimizer = keras.optimizers.Nadam(learning_rate=0.01)
loss_fn = keras.losses.mean_squared_error

n_epochs = 5
batch_size = 32
n_steps_per_epoch = len(X_train) // batch_size
total_steps = n_epochs * n_steps_per_epoch
global_step = 0
for X_batch, y_batch in train_set.take(total_steps):
    global_step += 1
    print("\rGlobal step {}/{}".format(global_step, total_steps), end="")
    with tf.GradientTape() as tape:
        y_pred = model(X_batch)
        main_loss = tf.reduce_mean(loss_fn(y_batch, y_pred))
        loss = tf.add_n([main_loss] + model.losses)
    gradients = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(gradients, model.trainable_variables))

optimizer = keras.optimizers.Nadam(learning_rate=0.01)
loss_fn = keras.losses.mean_squared_error

@tf.function
def train(model, n_epochs, batch_size=32,
          n_readers=5, n_read_threads=5, shuffle_buffer_size=10000, n_parse_threads=5):
    train_set = csv_reader_dataset(train_filepaths, repeat=n_epochs, n_readers=n_readers,
                                   n_read_threads=n_read_threads, shuffle_buffer_size=shuffle_buffer_size,
                                   n_parse_threads=n_parse_threads, batch_size=batch_size)
    n_steps_per_epoch = len(X_train) // batch_size
    total_steps = n_epochs * n_steps_per_epoch
    global_step = 0
    for X_batch, y_batch in train_set.take(total_steps):
        global_step += 1
        if tf.equal(global_step % 100, 0):
            tf.print("\rGlobal step", global_step, "/", total_steps)
        with tf.GradientTape() as tape:
            y_pred = model(X_batch)
            main_loss = tf.reduce_mean(loss_fn(y_batch, y_pred))
            loss = tf.add_n([main_loss] + model.losses)
        gradients = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(gradients, model.trainable_variables))

train(model, 5)

Here is a short description of each method in the Dataset class:

for m in dir(tf.data.Dataset):
    if not (m.startswith("_") or m.endswith("_")):
        func = getattr(tf.data.Dataset, m)
        if getattr(func, "__doc__", None):  # skip members that have no docstring
            print("● {:21s}{}".format(m + "()", func.__doc__.split("\n")[0]))

The TFRecord binary format

A TFRecord file is just a list of binary records. You can create one using a tf.io.TFRecordWriter:

with tf.io.TFRecordWriter("my_data.tfrecord") as f:
    f.write(b"This is the first record")
    f.write(b"And this is the second record")

And you can read it using a tf.data.TFRecordDataset:

filepaths = ["my_data.tfrecord"]

dataset = tf.data.TFRecordDataset(filepaths)

for item in dataset:
    print(item)
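To make "a list of binary records" more concrete, here is a minimal pure-Python sketch of the record framing: each record is stored as a little-endian uint64 length, a 4-byte masked CRC of that length, the payload bytes, and a 4-byte masked CRC of the payload. The sketch reads the CRC fields but does not verify them, and it only handles uncompressed data. The `read_tfrecord_payloads` and `frame_without_crc` helpers are hypothetical names for this illustration, and the demo frames its own data with zeroed CRC fields, so the bytes it produces are not a valid TFRecord file.

```python
import io
import struct

def read_tfrecord_payloads(stream):
    """Yield the raw payload of each record in a TFRecord-framed binary stream.

    The two CRC fields of each record are skipped, not verified.
    """
    while True:
        header = stream.read(8)
        if len(header) < 8:
            return  # end of stream
        (length,) = struct.unpack("<Q", header)  # uint64 payload length, little-endian
        stream.read(4)                           # masked CRC of the length (ignored here)
        payload = stream.read(length)
        stream.read(4)                           # masked CRC of the payload (ignored here)
        yield payload

def frame_without_crc(payload):
    """Frame a payload with zeroed CRC fields (for this demo only, NOT a valid TFRecord)."""
    return struct.pack("<Q", len(payload)) + b"\x00" * 4 + payload + b"\x00" * 4

fake_file = io.BytesIO(frame_without_crc(b"This is the first record") +
                       frame_without_crc(b"And this is the second record"))
for record in read_tfrecord_payloads(fake_file):
    print(record)
```

Since the reader skips the checksums, it should also be able to list the payloads of an uncompressed file such as my_data.tfrecord when given `open("my_data.tfrecord", "rb")`, but in practice `tf.data.TFRecordDataset` remains the right tool because it validates the data and supports compression.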

You can read multiple TFRecord files with just one TFRecordDataset. By default it will read them one at a time, but if you set num_parallel_reads=3, it will read 3 at a time in parallel and interleave their records:

filepaths = ["my_test_{}.tfrecord".format(i) for i in range(5)]
for i, filepath in enumerate(filepaths):
    with tf.io.TFRecordWriter(filepath) as f:
        for j in range(3):
            f.write("File {} record {}".format(i, j).encode("utf-8"))

dataset = tf.data.TFRecordDataset(filepaths, num_parallel_reads=3)
for item in dataset:
    print(item)
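The interleaving described above can be pictured with a simplified pure-Python model: keep up to `cycle_length` sources open, take one item from each in turn, and open the next source whenever one runs out. This is only a sketch of the idea under those assumptions. The `interleave` helper is a hypothetical name, and the real tf.data implementation reads in parallel, so its exact ordering may differ.

```python
def interleave(sources, cycle_length):
    """Round-robin over up to `cycle_length` sources at a time, one item per turn."""
    pending = iter(sources)
    active = []
    # Open the first `cycle_length` sources
    for source in pending:
        active.append(iter(source))
        if len(active) == cycle_length:
            break
    while active:
        still_active = []
        for iterator in active:
            try:
                yield next(iterator)
                still_active.append(iterator)
            except StopIteration:
                # Replace an exhausted source with the next unopened one, if any
                replacement = next(pending, None)
                if replacement is not None:
                    still_active.append(iter(replacement))
        active = still_active

# Hypothetical stand-ins for 5 files containing 3 records each
files = [["File {} record {}".format(i, j) for j in range(3)] for i in range(5)]
for record in interleave(files, cycle_length=3):
    print(record)
```

In this model the first three files are drained in lockstep, and the last two files are only opened once slots free up.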

options = tf.io.TFRecordOptions(compression_type="GZIP")
with tf.io.TFRecordWriter("my_compressed.tfrecord", options) as f:
    f.write(b"This is the first record")
    f.write(b"And this is the second record")

dataset = tf.data.TFRecordDataset(["my_compressed.tfrecord"],
                                  compression_type="GZIP")
for item in dataset:
    print(item)

A Brief Intro to Protocol Buffers

For this section you need to install protobuf. In general you will not have to do so when using TensorFlow, as it comes with functions to create and parse protocol buffers of type tf.train.Example, which are generally sufficient. However, in this section we will learn about protocol buffers by creating our own simple protobuf definition, so we need the protobuf compiler (protoc): we will use it to compile the protobuf definition to a Python module that we can then use in our code.

First let’s write a simple protobuf definition:

%%writefile person.proto
syntax = "proto3";
message Person {
  string name = 1;
  int32 id = 2;
  repeated string email = 3;
}

And let’s compile it (the --descriptor_set_out and --include_imports options are only required for the tf.io.decode_proto() example below):

!protoc person.proto --python_out=. --descriptor_set_out=person.desc --include_imports

!ls person*

from person_pb2 import Person

person = Person(name="Al", id=123, email=["a@b.com"]) # create a Person

print(person) # display the Person

person.name # read a field

person.name = "Alice" # modify a field

person.email[0] # repeated fields can be accessed like arrays

person.email.append("c@d.com") # add an email address

s = person.SerializeToString() # serialize to a byte string

s

person2 = Person() # create a new Person

person2.ParseFromString(s) # parse the byte string (27 bytes)

person == person2 # now they are equal
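To see where the 27 bytes come from, the sketch below encodes the same fields by hand, following the protobuf wire format: each field starts with a tag byte (field number shifted left by 3, combined with the wire type), strings are length-delimited, and integers are stored as varints. The helper names are hypothetical, and the sketch only covers the non-negative integers and strings needed for this message. It assumes fields are emitted in field-number order, as the Python protobuf library does here.

```python
def encode_varint(value):
    """Encode a non-negative integer as a protobuf base-128 varint."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)

def encode_string_field(field_number, text):
    """Length-delimited field (wire type 2): tag, byte length, UTF-8 bytes."""
    data = text.encode("utf-8")
    return encode_varint((field_number << 3) | 2) + encode_varint(len(data)) + data

def encode_int_field(field_number, value):
    """Varint field (wire type 0): tag, then the value (non-negative only in this sketch)."""
    return encode_varint((field_number << 3) | 0) + encode_varint(value)

encoded = (encode_string_field(1, "Alice") +     # name:  1 + 1 + 5 = 7 bytes
           encode_int_field(2, 123) +            # id:    1 + 1     = 2 bytes
           encode_string_field(3, "a@b.com") +   # email: 1 + 1 + 7 = 9 bytes
           encode_string_field(3, "c@d.com"))    # email: 1 + 1 + 7 = 9 bytes
print(encoded)
print(len(encoded))  # 27
```

Each entry of the repeated `email` field is written as its own tagged field, which is why the two addresses account for 18 of the 27 bytes.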

Custom protobuf

In rare cases, you may want to parse a custom protobuf (like the one we just created) in TensorFlow. For this you can use the tf.io.decode_proto() function:

person_tf = tf.io.decode_proto(

bytes=s,

message_type="Person",

field_names=["name", "id", "email"],

output_types=[tf.string, tf.int32, tf.string],

descriptor_source="person.desc")

person_tf.values

For more details, see the tf.io.decode_proto() documentation.

TensorFlow Protobufs

Here is the definition of the tf.train.Example protobuf:

syntax = "proto3";

message BytesList { repeated bytes value = 1; }

message FloatList { repeated float value = 1 [packed = true]; }

message Int64List { repeated int64 value = 1 [packed = true]; }

message Feature {

oneof kind {

BytesList bytes_list = 1;

FloatList float_list = 2;

Int64List int64_list = 3;

}

};

message Features { map<string, Feature> feature = 1; };

message Example { Features features = 1; };

# WARNING: there's currently a bug preventing "from tensorflow.train import X"

# so we work around it by writing "X = tf.train.X"

#from tensorflow.train import BytesList, FloatList, Int64List

#from tensorflow.train import Feature, Features, Example

BytesList = tf.train.BytesList

FloatList = tf.train.FloatList

Int64List = tf.train.Int64List

Feature = tf.train.Feature

Features = tf.train.Features

Example = tf.train.Example

person_example = Example(
    features=Features(
        feature={
            "name": Feature(bytes_list=BytesList(value=[b"Alice"])),
            "id": Feature(int64_list=Int64List(value=[123])),
            "emails": Feature(bytes_list=BytesList(value=[b"a@b.com", b"c@d.com"]))
        }))

with tf.io.TFRecordWriter("my_contacts.tfrecord") as f:
    f.write(person_example.SerializeToString())

feature_description = {

"name": tf.io.FixedLenFeature([], tf.string, default_value=""),

"id": tf.io.FixedLenFeature([], tf.int64, default_value=0),

"emails": tf.io.VarLenFeature(tf.string),

}

for serialized_example in tf.data.TFRecordDataset(["my_contacts.tfrecord"]):
    parsed_example = tf.io.parse_single_example(serialized_example,
                                                feature_description)

parsed_example

parsed_example["emails"].values[0]

tf.sparse.to_dense(parsed_example["emails"], default_value=b"")

parsed_example["emails"].values

Putting Images in TFRecords

from sklearn.datasets import load_sample_images

img = load_sample_images()["images"][0]

plt.imshow(img)

plt.axis("off")

plt.title("Original Image")

plt.show()

data = tf.io.encode_jpeg(img)

example_with_image = Example(features=Features(feature={

"image": Feature(bytes_list=BytesList(value=[data.numpy()]))}))

serialized_example = example_with_image.SerializeToString()

# then save to TFRecord

feature_description = { "image": tf.io.VarLenFeature(tf.string) }

example_with_image = tf.io.parse_single_example(serialized_example, feature_description)

decoded_img = tf.io.decode_jpeg(example_with_image["image"].values[0])

Or use decode_image() which supports BMP, GIF, JPEG and PNG formats:

decoded_img = tf.io.decode_image(example_with_image["image"].values[0])

plt.imshow(decoded_img)

plt.title("Decoded Image")

plt.axis("off")

plt.show()

Putting Tensors and Sparse Tensors in TFRecords

Tensors can be serialized and parsed easily using tf.io.serialize_tensor() and tf.io.parse_tensor():

t = tf.constant([[0., 1.], [2., 3.], [4., 5.]])

s = tf.io.serialize_tensor(t)

s

tf.io.parse_tensor(s, out_type=tf.float32)

serialized_sparse = tf.io.serialize_sparse(parsed_example["emails"])

serialized_sparse

BytesList(value=serialized_sparse.numpy())

dataset = tf.data.TFRecordDataset(["my_contacts.tfrecord"]).batch(10)

for serialized_examples in dataset:
    parsed_examples = tf.io.parse_example(serialized_examples,
                                          feature_description)

parsed_examples

Handling Sequential Data Using SequenceExample

syntax = "proto3";

message FeatureList { repeated Feature feature = 1; };

message FeatureLists { map<string, FeatureList> feature_list = 1; };

message SequenceExample {

Features context = 1;

FeatureLists feature_lists = 2;

};

# WARNING: there's currently a bug preventing "from tensorflow.train import X"

# so we work around it by writing "X = tf.train.X"

#from tensorflow.train import FeatureList, FeatureLists, SequenceExample

FeatureList = tf.train.FeatureList

FeatureLists = tf.train.FeatureLists

SequenceExample = tf.train.SequenceExample

context = Features(feature={

"author_id": Feature(int64_list=Int64List(value=[123])),

"title": Feature(bytes_list=BytesList(value=[b"A", b"desert", b"place", b"."])),

"pub_date": Feature(int64_list=Int64List(value=[1623, 12, 25]))

})

content = [["When", "shall", "we", "three", "meet", "again", "?"],

["In", "thunder", ",", "lightning", ",", "or", "in", "rain", "?"]]

comments = [["When", "the", "hurlyburly", "'s", "done", "."],

["When", "the", "battle", "'s", "lost", "and", "won", "."]]

def words_to_feature(words):
    return Feature(bytes_list=BytesList(value=[word.encode("utf-8")
                                               for word in words]))

content_features = [words_to_feature(sentence) for sentence in content]

comments_features = [words_to_feature(comment) for comment in comments]

sequence_example = SequenceExample(

context=context,

feature_lists=FeatureLists(feature_list={

"content": FeatureList(feature=content_features),

"comments": FeatureList(feature=comments_features)

}))

sequence_example

serialized_sequence_example = sequence_example.SerializeToString()

context_feature_descriptions = {

"author_id": tf.io.FixedLenFeature([], tf.int64, default_value=0),

"title": tf.io.VarLenFeature(tf.string),

"pub_date": tf.io.FixedLenFeature([3], tf.int64, default_value=[0, 0, 0]),

}

sequence_feature_descriptions = {

"content": tf.io.VarLenFeature(tf.string),

"comments": tf.io.VarLenFeature(tf.string),

}

parsed_context, parsed_feature_lists = tf.io.parse_single_sequence_example(

serialized_sequence_example, context_feature_descriptions,

sequence_feature_descriptions)

parsed_context

parsed_context["title"].values

parsed_feature_lists

print(tf.RaggedTensor.from_sparse(parsed_feature_lists["content"]))

The Features API

Let’s use the variant of the California housing dataset that we used in Chapter 2, since it contains categorical features and missing values:

import os
import tarfile
import urllib.request  # importing urllib alone does not guarantee that urllib.request is loaded

DOWNLOAD_ROOT = "https://raw.githubusercontent.com/ageron/handson-ml2/master/"
HOUSING_PATH = os.path.join("datasets", "housing")
HOUSING_URL = DOWNLOAD_ROOT + "datasets/housing/housing.tgz"

def fetch_housing_data(housing_url=HOUSING_URL, housing_path=HOUSING_PATH):
    os.makedirs(housing_path, exist_ok=True)
    tgz_path = os.path.join(housing_path, "housing.tgz")
    urllib.request.urlretrieve(housing_url, tgz_path)
    housing_tgz = tarfile.open(tgz_path)
    housing_tgz.extractall(path=housing_path)
    housing_tgz.close()

fetch_housing_data()

import pandas as pd

def load_housing_data(housing_path=HOUSING_PATH):
    csv_path = os.path.join(housing_path, "housing.csv")
    return pd.read_csv(csv_path)

housing = load_housing_data()

housing.head()

housing_median_age = tf.feature_column.numeric_column("housing_median_age")

age_mean, age_std = X_mean[1], X_std[1] # The median age is in column 1

housing_median_age = tf.feature_column.numeric_column(

"housing_median_age", normalizer_fn=lambda x: (x - age_mean) / age_std)

median_income = tf.feature_column.numeric_column("median_income")

bucketized_income = tf.feature_column.bucketized_column(

median_income, boundaries=[1.5, 3., 4.5, 6.])

bucketized_income

ocean_prox_vocab = ['<1H OCEAN', 'INLAND', 'ISLAND', 'NEAR BAY', 'NEAR OCEAN']

ocean_proximity = tf.feature_column.categorical_column_with_vocabulary_list(

"ocean_proximity", ocean_prox_vocab)

ocean_proximity

# Just an example, it's not used later on

city_hash = tf.feature_column.categorical_column_with_hash_bucket(

"city", hash_bucket_size=1000)

city_hash

bucketized_age = tf.feature_column.bucketized_column(

housing_median_age, boundaries=[-1., -0.5, 0., 0.5, 1.]) # age was scaled

age_and_ocean_proximity = tf.feature_column.crossed_column(

[bucketized_age, ocean_proximity], hash_bucket_size=100)

latitude = tf.feature_column.numeric_column("latitude")

longitude = tf.feature_column.numeric_column("longitude")

bucketized_latitude = tf.feature_column.bucketized_column(

latitude, boundaries=list(np.linspace(32., 42., 20 - 1)))

bucketized_longitude = tf.feature_column.bucketized_column(

longitude, boundaries=list(np.linspace(-125., -114., 20 - 1)))

location = tf.feature_column.crossed_column(

[bucketized_latitude, bucketized_longitude], hash_bucket_size=1000)

ocean_proximity_one_hot = tf.feature_column.indicator_column(ocean_proximity)

ocean_proximity_embed = tf.feature_column.embedding_column(ocean_proximity,

dimension=2)

Using Feature Columns for Parsing

median_house_value = tf.feature_column.numeric_column("median_house_value")

columns = [housing_median_age, median_house_value]

feature_descriptions = tf.feature_column.make_parse_example_spec(columns)

feature_descriptions

with tf.io.TFRecordWriter("my_data_with_features.tfrecords") as f:
    for x, y in zip(X_train[:, 1:2], y_train):
        example = Example(features=Features(feature={
            "housing_median_age": Feature(float_list=FloatList(value=[x])),
            "median_house_value": Feature(float_list=FloatList(value=[y]))
        }))
        f.write(example.SerializeToString())

def parse_examples(serialized_examples):
    examples = tf.io.parse_example(serialized_examples, feature_descriptions)
    targets = examples.pop("median_house_value") # separate the targets
    return examples, targets

batch_size = 32

dataset = tf.data.TFRecordDataset(["my_data_with_features.tfrecords"])

dataset = dataset.repeat().shuffle(10000).batch(batch_size).map(parse_examples)

Warning: the DenseFeatures layer currently does not work with the Functional API, see TF issue #27416. Hopefully this will be resolved before the final release of TF 2.0.

columns_without_target = columns[:-1]

model = keras.models.Sequential([
    keras.layers.DenseFeatures(feature_columns=columns_without_target),
    keras.layers.Dense(1)
])

# This is a regression task, so only the MSE loss is tracked (accuracy would be meaningless)
model.compile(loss="mse",
              optimizer=keras.optimizers.SGD(learning_rate=1e-3))

model.fit(dataset, steps_per_epoch=len(X_train) // batch_size, epochs=5)

some_columns = [ocean_proximity_embed, bucketized_income]

dense_features = keras.layers.DenseFeatures(some_columns)

dense_features({

"ocean_proximity": [["NEAR OCEAN"], ["INLAND"], ["INLAND"]],

"median_income": [[3.], [7.2], [1.]]

})

TF Transform

try:
    import tensorflow_transform as tft

    def preprocess(inputs):  # inputs is a batch of input features
        median_age = inputs["housing_median_age"]
        ocean_proximity = inputs["ocean_proximity"]
        # scale_to_z_score() already subtracts the mean and divides by the standard deviation
        standardized_age = tft.scale_to_z_score(median_age)
        ocean_proximity_id = tft.compute_and_apply_vocabulary(ocean_proximity)
        return {
            "standardized_median_age": standardized_age,
            "ocean_proximity_id": ocean_proximity_id
        }
except ImportError:
    print("TF Transform is not installed. Try running: pip3 install -U tensorflow-transform")

TensorFlow Datasets

import tensorflow_datasets as tfds

datasets = tfds.load(name="mnist")

mnist_train, mnist_test = datasets["train"], datasets["test"]

print(tfds.list_builders())

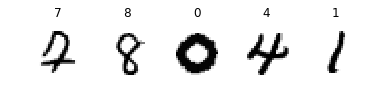

plt.figure(figsize=(6,3))
mnist_train = mnist_train.repeat(5).batch(32).prefetch(1)
for item in mnist_train:
    images = item["image"]
    labels = item["label"]
    for index in range(5):
        plt.subplot(1, 5, index + 1)
        image = images[index, ..., 0]
        label = labels[index].numpy()
        plt.imshow(image, cmap="binary")
        plt.title(label)
        plt.axis("off")
    break # just showing part of the first batch

datasets = tfds.load(name="mnist")

mnist_train, mnist_test = datasets["train"], datasets["test"]

mnist_train = mnist_train.repeat(5).batch(32)

mnist_train = mnist_train.map(lambda items: (items["image"], items["label"]))

mnist_train = mnist_train.prefetch(1)

for images, labels in mnist_train.take(1):
    print(images.shape)
    print(labels.numpy())

datasets = tfds.load(name="mnist", batch_size=32, as_supervised=True)

mnist_train = datasets["train"].repeat().prefetch(1)

model = keras.models.Sequential([
    keras.layers.Flatten(input_shape=[28, 28, 1]),
    keras.layers.Lambda(lambda images: tf.cast(images, tf.float32)),
    keras.layers.Dense(10, activation="softmax")])

model.compile(loss="sparse_categorical_crossentropy",
              optimizer=keras.optimizers.SGD(learning_rate=1e-3),
              metrics=["accuracy"])

model.fit(mnist_train, steps_per_epoch=60000 // 32, epochs=5)

TensorFlow Hub

import tensorflow_hub as hub

hub_layer = hub.KerasLayer("https://tfhub.dev/google/tf2-preview/nnlm-en-dim50/1",

output_shape=[50], input_shape=[], dtype=tf.string)

model = keras.Sequential()

model.add(hub_layer)

model.add(keras.layers.Dense(16, activation='relu'))

model.add(keras.layers.Dense(1, activation='sigmoid'))

model.summary()

sentences = tf.constant(["It was a great movie", "The actors were amazing"])

embeddings = hub_layer(sentences)

embeddings